Given the importance of Kubernetes in today’s environment, we wanted to give you a “one stop shop” where you can learn the essentials you need to get started.

What is Kubernetes?

Simply put, Kubernetes, or K8s, is a container orchestration system. In other words, when you use K8s, container-based applications can be deployed, scaled, and managed automatically.

The objective of Kubernetes container orchestration is to abstract away the complexity of managing a fleet of containers, which package applications together with the code and everything else needed to run them wherever they’re provisioned. By interacting with the K8s REST API, users describe the desired state of their applications, and K8s does whatever is necessary to make the infrastructure conform: it deploys groups of containers, replicates them, redeploys them if some fail, and so on.
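The desired-state model can be illustrated with a toy reconciliation loop. This is a minimal sketch in plain Python, not Kubernetes code: the `reconcile` function, the app names, and representing the cluster as a dictionary of replica counts are all hypothetical simplifications. Real controllers watch the API server and create or delete Pods rather than adjusting integers.

```python
def reconcile(desired: dict[str, int], running: dict[str, int]) -> list[str]:
    """Toy reconciliation loop: converge `running` toward `desired`.

    Both dicts map a hypothetical app name to a replica count.
    `running` is mutated in place; the returned list records the actions taken.
    """
    actions = []
    # Start or stop replicas for every app the user declared.
    for app, want in desired.items():
        have = running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
        running[app] = want
    # Stop apps that are still running but are no longer declared.
    for app in list(running):
        if app not in desired:
            actions.append(f"stop {running[app]} x {app}")
            del running[app]
    return actions


if __name__ == "__main__":
    running = {"web": 1, "old-job": 2}
    desired = {"web": 3, "api": 2}
    print(reconcile(desired, running))  # ['start 2 x web', 'start 2 x api', 'stop 2 x old-job']
    running["web"] = 1                  # simulate two web replicas crashing
    print(reconcile(desired, running))  # ['start 2 x web']
    print(reconcile(desired, running))  # [] (already converged)
```

The useful property here is idempotence: running the loop again on a converged state does nothing, which is what allows a controller to run the same comparison continuously and still react when something fails.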

Because it’s open source, Kubernetes can run almost anywhere, and the major public cloud providers all offer easy ways to consume it. Private clouds based on OpenStack can also run Kubernetes, and bare-metal servers can serve as its worker nodes. So if you describe your application with K8s building blocks, you’ll be able to deploy it on VMs or bare-metal servers, in public or private clouds.

Let’s take a look at the basics of how K8s works, so that you will have a solid foundation to dive deeper.

What is a Kubernetes cluster? The Kubernetes architecture

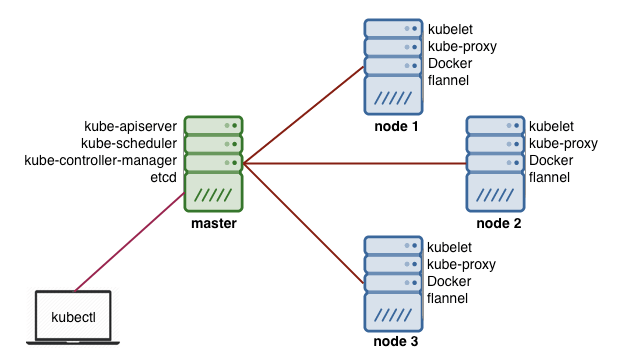

The K8s architecture is relatively simple. You don’t interact directly with the nodes hosting your application, only with the control plane, which exposes an API and is in charge of scheduling and replicating groups of containers called Pods. The kubectl command-line interface lets you interact with that API, either to declare your application’s desired state or to gather detailed information about the infrastructure’s current state.
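To make “declaring the desired state” concrete, here is a sketch that builds a minimal Pod object as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside the more common YAML. The `pod_manifest` helper is hypothetical, and the Pod name, `nginx:1.25` image, port, and `app: web` label are illustrative choices; only the field names (`apiVersion`, `kind`, `metadata`, `spec.containers`) come from the Kubernetes v1 Pod schema.

```python
import json


def pod_manifest(name: str, image: str, port: int, labels: dict[str, str]) -> dict:
    """Hypothetical helper: build a minimal v1 Pod object describing one desired Pod."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    manifest = pod_manifest("web", "nginx:1.25", 80, {"app": "web"})
    # Print JSON to stdout; redirect it to a file and hand it to kubectl.
    print(json.dumps(manifest, indent=2))
```

With kubectl configured for a cluster, you could run `python make_pod.py > pod.json`, then `kubectl apply -f pod.json` to submit the desired state and `kubectl get pods -l app=web` to inspect the current state (the script name is, again, just an example).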

Let’s look at the various pieces.

Nodes

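Each node that hosts part of your distributed application runs its containers with a container runtime. Early Kubernetes releases relied on Docker or a similar technology, such as rkt (originally named Rocket) from CoreOS; current releases use runtimes that implement the Container Runtime Interface, such as containerd or CRI-O. The nodes also run two additional pieces of software: kube-proxy, which maintains the network rules that make your running app reachable, and kubelet, which receives instructions from the K8s control plane and makes sure the containers described in each Pod are running. Nodes can also run flannel, an etcd-backed network fabric for containers.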

Master (control plane)

The control plane itself runs the API server (kube-apiserver), the scheduler (kube-scheduler), the controller manager (kube-controller-manager), and etcd, a highly available key-value store for shared configuration and service discovery that implements the Raft consensus algorithm.

Now let’s look at some of the terminology you might run into.

Terminology

K8s has its own vocabulary which, once you get used to it, gives you some sense of how things are organized. These terms include:

- Pods: A Pod is a group of one or more containers, together with their shared storage and options about how to run them. Each Pod gets its own IP address.

- Labels: Labels are key/value pairs that you attach to objects such as Pods, Replication Controllers, and Endpoints, so that those objects can be selected and grouped.

- Annotations: Annotations are key/value pairs used to store arbitrary non-queryable metadata.

- Services: Services are an abstraction, defining a logical set of Pods and a policy by which to access them over the network.

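- Replication Controller: Replication controllers ensure that a specified number of Pod replicas are running at any one time. In current Kubernetes, Deployments (which manage ReplicaSets) are the recommended way to achieve this.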

- Secrets: Secrets hold sensitive information such as passwords, TLS certificates, OAuth tokens, and SSH keys.

- ConfigMap: ConfigMaps inject configuration data into containers while keeping the containers themselves agnostic of Kubernetes.
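Labels are what tie several of these objects together: a Service and a Replication Controller both use a label selector to decide which Pods they are responsible for, while annotations play no part in selection. The sketch below models the simplest, equality-based kind of selector in plain Python. The function names, Pod names, and labels are hypothetical, and real Kubernetes also supports set-based requirements (such as `in` and `notin`) that this sketch ignores.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every key/value pair in the selector must be present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict[str, str], pods: dict[str, dict[str, str]]) -> list[str]:
    """Names of the Pods whose labels satisfy the selector (roughly what a Service routes to)."""
    return [name for name, labels in pods.items() if matches(selector, labels)]


def replica_delta(replicas: int, selector: dict[str, str], pods: dict[str, dict[str, str]]) -> int:
    """Pods a replication controller would need to create (positive) or delete (negative)."""
    return replicas - len(select(selector, pods))


if __name__ == "__main__":
    # Hypothetical Pods and their labels.
    pods = {
        "web-1": {"app": "web", "tier": "frontend"},
        "web-2": {"app": "web", "tier": "frontend"},
        "api-1": {"app": "api", "tier": "backend"},
    }
    print(select({"app": "web"}, pods))            # ['web-1', 'web-2']
    print(replica_delta(3, {"app": "web"}, pods))  # 1
```

Because both the Service and the controller work from the same selector, a replacement Pod created with the right labels is picked up by the Service automatically, with no extra wiring.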

Why Kubernetes?

So what is K8s used for? To justify the added complexity K8s brings, it has to deliver real benefits. At its core, a cluster manager such as K8s exists to serve developers, letting them serve themselves without having to request support from the operations team.

Reliability is one of the major benefits of K8s. Google has over 10 years of experience running infrastructure with Borg, its internal container orchestration system, and built K8s based on that experience. K8s can limit the impact of failures on your application’s availability and performance, for example by restarting failed containers, replacing Pods lost when a node fails, and keeping the requested number of replicas running.